1. Early Computers:

- 1940s-1950s: The first electronic computers were massive machines that occupied entire rooms. They relied on vacuum tubes for processing and were primarily used for scientific and military purposes. Examples include the ENIAC (1946) and UNIVAC (1951).

2. Transistors and Miniaturization:

- 1950s-1960s: The invention of the transistor at Bell Labs in 1947 revolutionized computing by replacing bulky, unreliable vacuum tubes. Transistors were smaller, more reliable, and consumed less power, which made smaller and faster computers practical once they entered commercial machines in the late 1950s. This era saw the rise of mainframes like IBM’s System/360 (1964), a family of compatible machines that set standards for compatibility and scalability in computing.

3. Integrated Circuits:

- 1960s-1970s: The integration of multiple transistors onto a single semiconductor chip, known as the integrated circuit (IC), was a monumental breakthrough. This technology, pioneered independently by Jack Kilby (Texas Instruments) and Robert Noyce (Fairchild Semiconductor) in the late 1950s, dramatically increased computing power while reducing size and cost. Smaller, cheaper minicomputers emerged alongside mainframes, and the concept of the microprocessor began to take shape.

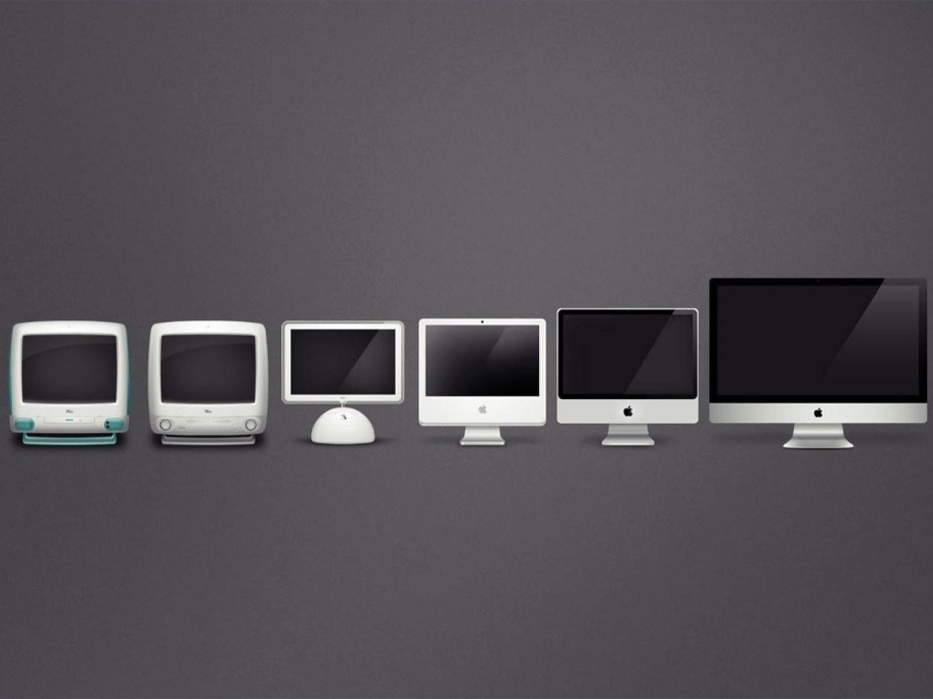

4. Personal Computers:

- 1970s-1980s: The invention of the microprocessor in the early 1970s (Intel 4004, 8008, 8080) marked the birth of the personal computer (PC) era. Companies like Apple (Apple I in 1976) and IBM (IBM PC in 1981) introduced affordable desktop computers that could be used by individuals and small businesses. This democratization of computing power sparked a revolution in personal productivity and entertainment.

5. Advancements in Storage and Connectivity:

- 1980s-1990s: Hard disk drives became standard storage devices, offering larger capacities and faster access times than floppy disks. Networking technologies such as Ethernet connected computers locally within offices, while the TCP/IP-based Internet linked those networks globally, transforming how information was shared and accessed.

6. Mobile and Consumer Electronics:

- 1990s-2000s: These decades saw laptops mature into machines that combined computing power with portability. Advances in battery technology, notably lithium-ion cells, allowed laptops to operate independently of mains power for extended periods. Concurrently, mobile devices such as smartphones and, later, tablets began to integrate computing capabilities with cellular communication, leading to the era of ubiquitous computing.

7. Multicore Processors and Specialized Hardware:

- 2000s-2010s: Moore’s Law kept transistor counts rising, but power and heat limits stalled clock-speed gains in the mid-2000s, so processors evolved to include multiple cores on a single chip. Multiple cores allowed parallel processing and improved performance for multitasking. In parallel, graphics processing units (GPUs) were adopted for general-purpose workloads such as artificial intelligence (AI), and specialized hardware accelerators emerged to handle specific computational tasks more efficiently, driving advancements in fields like machine learning and scientific computing.
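
As a rough illustration of the doubling trend behind Moore’s Law, the sketch below assumes that transistor counts double every two years, starting from the Intel 4004’s roughly 2,300 transistors in 1971. The function name `projected_transistors`, the fixed two-year period, and the baseline are illustrative assumptions. Real chips only loosely follow this curve.

```python
def projected_transistors(year, base_year=1971, base_count=2300, doubling_years=2.0):
    """Project a transistor count under an idealized Moore's Law.

    Assumes a fixed doubling period (two years by default) and a baseline
    of about 2,300 transistors in 1971 (Intel 4004). Both are simplifying
    assumptions for illustration only.
    """
    if year < base_year:
        raise ValueError("year must not precede the baseline year")
    doublings = (year - base_year) / doubling_years
    return base_count * 2 ** doublings


if __name__ == "__main__":
    # Print the idealized projection at ten-year intervals.
    for year in (1971, 1981, 1991, 2001, 2011):
        print(f"{year}: ~{projected_transistors(year):,.0f} transistors")
```

Under these assumptions, forty years of doubling (twenty doublings) takes the projection from a few thousand transistors to about 2.4 billion, which conveys the scale of growth that made multicore chips feasible.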

8. Internet of Things (IoT) and Wearables:

- 2010s-2020s: The proliferation of connected devices in the IoT expanded the scope of computing beyond traditional computers. Smart home devices, industrial sensors, and wearable electronics (smartwatches, fitness trackers) integrated computing power into everyday objects, enhancing convenience and efficiency. These devices often leverage cloud computing for data storage and processing, enabling seamless connectivity and real-time analytics.

9. Quantum Computing:

- Emerging: Quantum computing represents a paradigm shift in computing technology. Instead of classical bits (which are either 0 or 1), quantum computers use quantum bits, or qubits, which can exist in a superposition of 0 and 1. Entanglement additionally links the states of multiple qubits so that they cannot be described independently. For certain problems, such as factoring large integers, quantum algorithms are expected to run exponentially faster than the best known classical ones, making quantum computers promising for applications in cryptography, optimization, and scientific simulations.
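
The superposition of a single qubit can be written compactly in standard notation. The symbols $\alpha$ and $\beta$ are the usual textbook amplitudes and do not come from this article:

$$
|\psi\rangle = \alpha\,|0\rangle + \beta\,|1\rangle, \qquad |\alpha|^2 + |\beta|^2 = 1
$$

Here $|\alpha|^2$ and $|\beta|^2$ are the probabilities of measuring 0 and 1 respectively. A register of $n$ qubits is described by $2^n$ such amplitudes, which is why simulating quantum systems on classical hardware becomes expensive so quickly.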

Conclusion:

The evolution of computer hardware has been characterized by continuous innovation, driven by the quest for increased processing power, efficiency, and connectivity. From the room-sized mainframes of the 1940s to the powerful smartphones and quantum computers of today, computing technology has transformed every aspect of modern life. Looking ahead, the future of computer hardware promises further advancements in quantum computing, AI-driven architectures, and sustainable computing solutions, paving the way for new possibilities and challenges in the digital age.