Information Technology (IT) has grown from simple counting aids into an integral part of modern life. Its evolution is marked by milestones that have shaped the way we process, store, and communicate information. The journey from rudimentary tools to sophisticated digital systems also shows how quickly the pace of technological change has accelerated.

Early Beginnings

The roots of IT can be traced back to ancient times when humans first developed tools to aid in computation and information management. One of the earliest known tools is the abacus, used by the Sumerians around 2300 BC for arithmetic calculations. This simple device laid the foundation for the development of more complex computing machines.

In the 17th century, Blaise Pascal invented the Pascaline, a mechanical calculator that could add and subtract directly. It was followed by Gottfried Wilhelm Leibniz’s Step Reckoner, which could also multiply and divide. These early mechanical calculators were precursors to modern computers and reflect a long-standing desire to automate and simplify computation.

The Mechanical Age

The 19th century saw a significant advance with Charles Babbage’s Analytical Engine, widely regarded as the first design for a general-purpose mechanical computer. Although the engine was never completed, its design separated a “mill” that performed arithmetic from a “store” that held numbers, anticipating the central processing unit (CPU) and memory that are fundamental to modern computers.

Ada Lovelace, a mathematician and writer, worked with Babbage and is often regarded as the first computer programmer because her published notes on the Analytical Engine included a step-by-step method for computing Bernoulli numbers on the machine. She also recognized that the engine could be used for more than numerical calculation, envisioning its potential to process symbolic information, which foreshadowed the versatility of modern computing.

The Electronic Era

The mid-20th century marked the transition from mechanical to electronic computing. During World War II, the need for rapid and accurate computation drove the development of the first electronic computers. One of the most notable is the Electronic Numerical Integrator and Computer (ENIAC), completed in 1945 and publicly unveiled in 1946. ENIAC performed complex calculations far faster than the electromechanical machines that preceded it, heralding the beginning of the electronic computing era.

The invention of the transistor in 1947 by John Bardeen, Walter Brattain, and William Shockley at Bell Labs revolutionized electronics and computing. Transistors replaced bulky vacuum tubes, leading to smaller, more efficient, and more reliable computers. This breakthrough paved the way for the integrated circuit, first demonstrated in the late 1950s and widely adopted in the 1960s, which placed many transistors on a single chip and further miniaturized computers while increasing their power.

The Computer Revolution

The 1970s and 1980s witnessed the advent of personal computers (PCs), bringing computing power to individuals and small businesses. The introduction of microprocessors, beginning with Intel’s 4004 in 1971, put a processor on a single chip and made affordable, compact PCs possible. Companies like Apple, IBM, and Microsoft played pivotal roles in popularizing personal computing, with products like the Apple II, IBM PC, and Microsoft Windows becoming household names.

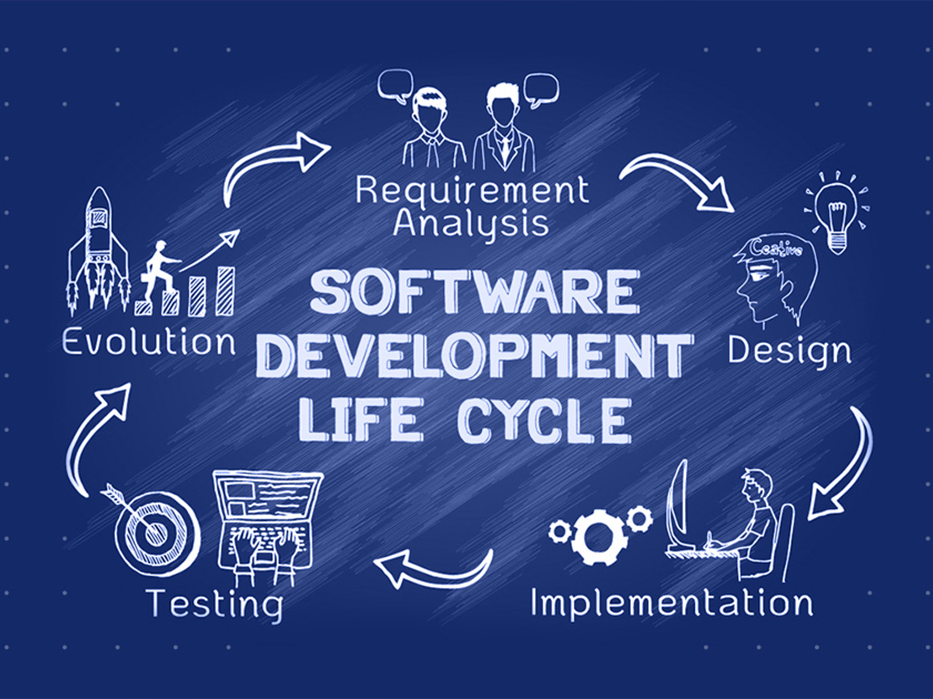

During this period, software development also advanced rapidly. Operating systems, programming languages, and applications became more sophisticated, allowing users to perform a wide range of tasks. The Internet, which had grown out of research networks in earlier decades, opened to commercial and public use in the 1990s; together with the rapid spread of the World Wide Web, it further transformed IT by connecting people and devices worldwide.

The Digital Age

The late 20th and early 21st centuries have been characterized by the digital revolution, marked by the convergence of computing, telecommunications, and media. Mobile technology, cloud computing, big data, and artificial intelligence (AI) have become central to IT, driving innovation and changing how we live and work.

Smartphones and tablets have made computing portable and accessible, while cloud computing has revolutionized data storage and processing, enabling scalable and flexible IT solutions. The rise of big data analytics has allowed organizations to harness vast amounts of information to gain insights and make data-driven decisions. AI and machine learning are pushing the boundaries of what is possible, from autonomous vehicles to advanced medical diagnostics.

Future Trends

As we look to the future, IT continues to evolve at a rapid pace. Emerging technologies such as quantum computing, blockchain, and the Internet of Things (IoT) promise new capabilities and new challenges. Quantum computing could dramatically speed up certain classes of problems, such as factoring large numbers and simulating quantum systems, while blockchain offers shared, tamper-evident ledgers for recording transactions and data. IoT is set to connect billions of devices, creating smart environments and enhancing automation.

Conclusion

The history and evolution of Information Technology reflect humanity’s persistent pursuit of knowledge and efficiency. From ancient counting tools to modern digital systems, IT has reshaped how we work, communicate, and learn. As the technology continues to advance, it is likely to keep changing how we interact with the world and with each other.